It sounds pretty damning. Two recent surveys suggest that Google Shopping isn’t leading searchers to the best prices on products. But the surveys weren’t well documented, nor did they include competitors like Bing, Shopzilla, PriceGrabber and Nextag, which have similar issues. So, Search Engine Land will be running its own fully documented tests. Every few days, we’ll search for an item and show what we found, starting with today’s test, a search for a toaster.

How Much Is That Toaster In The Shopping Search Window?

This is a long story. It’s designed to be that way so that people can understand exactly what was tested and the reasoning for selecting which prices to compare, details the two other surveys lack. This type of detail is important, especially when lodging accusations with the US Federal Trade Commission that consumers are potentially being misled, as Consumer Watchdog has done.

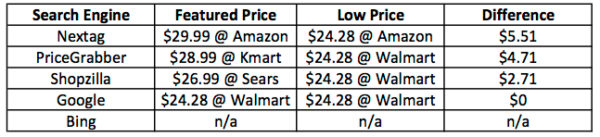

Take the time to read the full story if you want to understand just how very complicated it is to conduct a survey of this nature. But for those who want a quick summary now, the first test shows that Google actually ranked best in featuring a price that matched the lowest price that could be found from a major merchant.

The survey also found that all the shopping search engines have problems disclosing to consumers why they rank results the way they do. Several also have serious pricing inaccuracies.

Remember, a single test isn’t enough to draw a conclusion across the entire industry. I’m not sure the six tests the Financial Times ran are enough, nor the 14 that Consumer Watchdog ran, either. But far more important, how you test and what you count can skew the results of even a large sample.

Why look for a toaster? That’s one of several items where Consumer Watchdog said its survey found a $20 price gap between what was listed on Google and what was listed as the lowest price for the same item at Shopzilla, PriceGrabber or Nextag, when it looked for “Cuisinart Classic 2P Slice Toaster.”

Bing wasn’t surveyed, even though it has shopping search ads exactly like Google’s. Nor did Consumer Watchdog explain the exact query it used or list a model number for the toaster. Nothing was documented. We were only given the end result, and when it comes to comparing shopping search engines, as you’ll see, it’s essential to show your work.

We can’t search for the exact same toaster as Consumer Watchdog did, because we don’t know the model. Plus, even if we did know it, looking for it might cause some to assume Google’s now “fixed” the toaster price problem for that particular model. So, for our test, we’re seeking a Hamilton Beach 2-Slice Metal Toaster, model 22504. Why? It’s one of the best selling toasters at Amazon, so it seems a popular model worth testing.

Which Price Do You Compare To?

At Google, the search began by entering “Hamilton Beach 2-Slice Metal Toaster” into the main search box. That brought back a special area of the search page with shopping search results, as the arrow points to below:

The results in the box are drawn from Google Shopping, which controversially shifted to an all-ad model last year. Only people who advertise are included in Google Shopping listings. The same is true of all the other shopping search engines in today’s test, by the way. Google isn’t some odd exception.

Already, you can see the challenge in deciding how to survey if the move to all-ads is causing lower prices not to be found. Which price in the results do you count? The $26.99 one from Sears, because it’s first? The $24.28 one from Walmart, because it’s lowest? The $34.99 one from JCPenney, because it’s highest? And are these even all the same model? One’s a different color than the others.

Consumer Watchdog’s survey method was to pick one result out of this box and compare it to the lowest price for a particular item that it could find on another shopping search engine, where it may have “drilled down” into the results in order to find that better price. Or perhaps it didn’t. The survey doesn’t make this clear.

The Financial Times, which did its own survey, instead compared prices listed within these results to what searchers might find at Google itself, if they know how to drill down to get more listings. Again, which price from the results box was used isn’t clear, and that matters when you can’t easily tell whether you’re comparing the exact same product.

Four Searches To Verify The Lowest Price At Google

Our survey will use what we think is the cheapest price shown in the results box for the exact same product, as best we can tell. We’ll also compare that to what we’ll call the featured merchant price and, finally, to the best price from any well-known merchant listed.

To do all this, it’s time to “drill down” into Google’s shopping results. We already did one initial search, and now we’ll do more in the hunt for the best price. The second search happens by clicking on that “Shop for Hamilton Beach….” link the arrow points to in the screenshot above. Doing that brings up this:

That click brought up a list of various items Google thinks match the search, drawn from Google Shopping. These results are still all ads. You just get more of them.

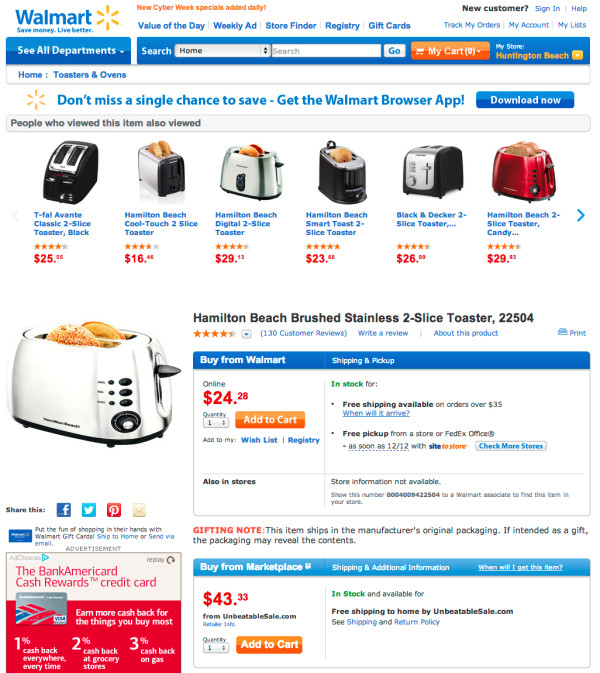

The first listing is for the model we’re after, and clicking on it made the result get larger, with Walmart as the featured merchant offering it at $24.28, before tax and shipping.

Why’s Walmart featured? No idea, and neither does the typical consumer. It might be that Walmart is paying more. It might be that Google’s complicated system of showing ads based on advertiser “quality score” gives Walmart a bump. It’s not necessarily because Walmart has the cheapest price of the four merchants shown in that box; in other searches, I’ve seen a higher-priced merchant still get featured.

You can drill down further by clicking on the “Compare prices from 50+ stores” link in the box. That constitutes a third search, and doing so brings up this list:

On that list, Walmart still has the lowest base price. But we’re still not being shown all the listings, only the first ten, ranked by whatever mystery criteria Google is using. If you want the lowest price, you effectively have to do a fourth search by clicking on the “Base Price” column heading, which re-sorts the listings like this:

If you do that, you’ll discover that a merchant called “Unopened Savings” is offering the low, low price of $12.27 for the toaster. But because this is an unrated merchant with no recognizable brand, we’re not counting it for this test. Among the merchants we do count, Walmart still has the lowest price.

That’s also a lesson in why it makes sense that Google doesn’t just rank merchants by lowest price or even highest customer ratings. Using only those criteria can easily let extremes emerge that don’t correspond to a good purchase experience. An unknown retailer offering a too-good-to-be-true price might indeed be too good to be true. A merchant with only a few reviews could easily get the highest customer ratings, based on just a tiny sample. It would be good if Google better explained how relevancy is determined, in an easy-to-find location, for consumers who care. But the omission isn’t unusual in the shopping search space, either.

Google: Bottom Line, Lowest Price Is Shown

So what does the survey show?

- Lowest price in ad box: $24.28

- Lowest featured price in drill down: $24.28

- Lowest major brand price in drill down: $24.28

For this test, against its own listings, Google did perfectly. But are there cheaper prices out there that competing search engines can find?

Bing: No Major Retailers Listed; No Lowest Price

Google’s biggest competitor is Bing. Here’s what you get for the same search there:

Just like Google, Bing only shows shopping results for those who pay, a change it made earlier this year, but without the controversy Google encountered. These ads appear in a box with a similar format to Google’s.

Unlike the search at Google, the results Bing displayed were terrible. None were for the model we were after. Some weren’t even for the right brand or the specified two-slice capacity.

Changing to a search for “Hamilton Beach 22504” to add the model number didn’t improve things much. The right toaster finally appeared, but apparently the only places it’s available are eBay and a small merchant called Hayneedle, with the lowest price being $36.99:

Unlike with Google, there’s no way to “drill down” into Bing Shopping to search for a better price. That’s because Bing completely killed Bing Shopping earlier this year in favor of an all-ad format. What appears in that shopping ad box is all you get.

Of course, Bing would argue that it does provide “free” shopping results that allow for more inclusivity than Google’s model, through a system called “Rich Captions.” See that second arrow above, pointing to a Walmart listing? That’s Walmart appearing in Bing’s results, for free, through a system that displays the toaster’s price, $24.31, and that it’s in stock.

So, should that price be counted as Bing’s “low price” from its shopping results? Not if you’re trying to run a survey comparing Google’s shopping listings to Bing’s, as both Consumer Watchdog and the Financial Times did. That’s because neither seems to have used any pricing displayed through Google’s similar, and totally free, Rich Snippets system. Here’s an example of those from Google, for a search on “Hamilton Beach 22504,” below:

See how complicated trying to compare shopping search engines can be? With Bing, our original search produced no pricing, either through the ad block or via Rich Captions, unlike with Google. You certainly didn’t get what Bing promised when it killed its shopping search engine earlier this year, a better experience:

You no longer need to waste time navigating to a dedicated “shopping” experience to find what you’re looking for. Based on your intent, we’ll serve the best results.

You absolutely don’t get the ability to see all the shopping results, to determine if Bing is showing the best price from all those it knows about — which is crucial for measuring Bing against the same accusations that Google faces.

For the purposes of our test, we’re going with the Bing pricing shown in the ad block and marking it N/A, because no major retailer selling directly appears there.

PriceGrabber: Low Prices That Don’t Exist

Over to PriceGrabber, where the toaster is listed multiple times, with various prices. As with Google, the challenge is knowing which is the right model for the price comparison:

The first of the listings promising a price “as low as $17.99” seems good, so let’s drill down by clicking on it:

Where’s that $17.99 price? Oddly, PriceGrabber shoves it way down at the bottom of the page, choosing to push consumers toward Kmart’s $28.99 listing first. Why? As with Google, who knows. A consumer certainly doesn’t, just as they probably also don’t know that PriceGrabber is almost certainly using the same all-ads model as Google. I can’t say for certain, because PriceGrabber seems to lack any definitive disclosure page for consumers.

Which price to use for our survey? We could go with the Target price of $17.99, but there’s one problem with that. It doesn’t really exist:

Clicking on the offer reveals that the price Target is really selling the toaster for is $24.49, which opens PriceGrabber up to potential accusations of bait-and-switch. Amazon, which has the next lowest listed price of $19.99, turns out to really be selling it for $24.28. That’s cheaper than the third lowest price on PriceGrabber, Walmart at $24.31. But since PriceGrabber isn’t actually listing the correct Amazon price, for the purposes of our survey we’ll use the next lowest price it shows: Walmart’s, counted at the $24.28 actually shown when you go to Walmart’s site. As for the “featured price,” that would be the first price on the original list, Kmart at $28.99.

Nextag: Pushing Higher-Priced Amazon Listing Over Lower-Priced One

Searching for “Hamilton Beach 2-Slice Metal Toaster” at Nextag proved to be difficult. Well, impossible. No matter what I did, that search just generated no results. In the end, I resorted to a search for “Hamilton Beach 22504,” which came back with this:

As with Google and PriceGrabber, the lowest price isn’t shown first. Instead, sorting is done by “Best Match” order, whatever that is. Nothing on the page explains it. The help page, if consumers go to it, says nothing about it either, though at least it discloses that Nextag gets paid by merchants to list them, just as Google does.

Changing the sort to lowest price brings this up:

Now we discover that the lowest price from a major retailer, according to Nextag, is Amazon at $23.77, not the Amazon listing at $29.99 that it presented first. Except, as it turns out, that $23.77 price doesn’t exist. Going to the offer finds the price is really $24.28, which matches another Amazon listing that Nextag also shows.

For the survey, the low price will be $24.28, while the featured price will be $29.99, both coming from Amazon, which, yes, lists the identical product at two different price points.

Shopzilla: Inaccurate Prices Shown

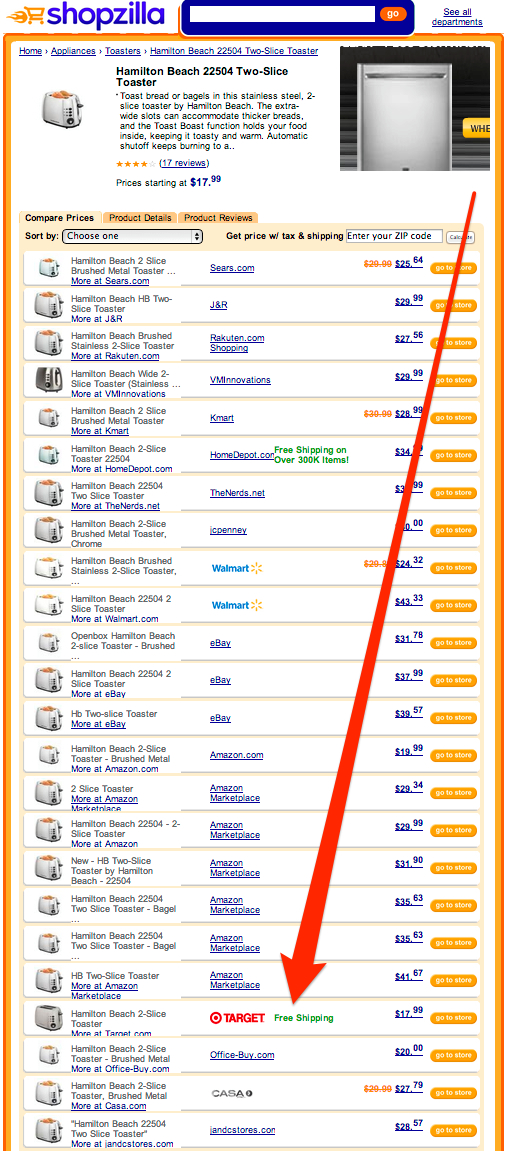

At Shopzilla, “Hamilton Beach 2-Slice Metal Toaster” didn’t find the model we were after, so I did a search for “Hamilton Beach 22504,” which returned this list:

The model appears to be listed three times. If you were to click on any of these listings using the “go to store” link, rather than the small “Compare price at other stores” link, you’d pay anywhere between $31.90 and $43.33 for the item. Walmart is listed first, at $43.33.

Consumer Watchdog’s survey methodology assumes that’s what most consumers would do: click on those links and not try to find better pricing. But let’s try the drill-down. Selecting the first of the listings brought up a page with prices from a variety of merchants:

As with Google, PriceGrabber and Nextag, prices aren’t shown in order of lowest-to-highest. Instead, the now familiar “Best Match” sort order is used. There’s no help page that explains what this means, just as there’s no help page providing any disclosure that all these listings are almost certainly ads.

The “Best Match” sort order means Target, with the lowest price of any major retailer at $17.99, is buried way down on the list. Instead, consumers are pointed first at a Sears offer to buy the toaster for $25.64.

Except that Target low price? It’s really $24.99. And that Sears offer? It’s actually $26.99:

As it turns out, the lowest price from a major retailer is from Walmart, at $24.32 – which actually turns out to be $24.28, when you go to the page.

Wait, wasn’t Walmart the first listing way back on Shopzilla, offering the toaster for $43.33? Yes, it was. Walmart has one page where it oddly sells the same item at two different prices, cheaper if you buy from it directly and more expensive if you buy through a partner:

For the purposes of our survey, we’re considering the featured price on Shopzilla to be the first price you get when you drill down into the listings: Sears, counted at the $26.99 accurately shown on its landing page. The low price is Walmart at $24.28. Counting the Walmart listing featured before the drill-down is problematic, because it doesn’t accurately show the actual price.

The Big Wrap-Up: They All Kind Of Suck

As I said at the outset, we’ll run a few more tests like this. It’s entirely possible that Google, which came out best in this one, won’t do as well in another. But the bigger issue to me is that the entire state of shopping search feels like a big mess.

It’s pretty clear that there’s not a lot of consumer disclosure going on by anyone. That’s despite the fact that I called the FTC’s attention to this issue last year:

- A Letter To The FTC Regarding Search Engine Disclosure Compliance

The FTC’s response was what I’ve come to expect from an agency that seems ill-prepared and ill-equipped to understand the complicated search engine space. It did nothing more than encourage search engines to pretty-please disclose ads more:

- FTC Updates Search Engine Ad Disclosure Guidelines After “Decline In Compliance”

Ironically, Google is coming under fresh fire for not disclosing enough when it seems to do far more than some of the shopping search engines that it’s competing with.

The mess of shopping search also, in my view, weakens the argument that Google would somehow be better if it pointed people to competing shopping search engines even more. Given that several clearly have trouble showing fresh, valid prices, that’s just going to put consumers through even more hoops and frustration.

Rather, it would be good to see them all step up efforts to improve relevancy in a space that feels largely forgotten. I’m an expert on search engines, and trying to ferret out what these shopping search engines are showing, and how to use them to double-check that I’m getting the best price, is exhausting. I feel sorry for consumers relying on them for that purpose.